Beginner's Tutorial: Automatically Back Up VPS Data and Upload It to FTP


1. Install crontab

To use crontab on the VPS, you may need to install it first. Log in over SSH:

2. Install the email sending component

1. Install the email component under CentOS

3. Use the automatic backup script

Script content:

    #!/bin/bash
    #Start your edit here
    MYSQL_USER=root #mysql username
    #Your edit ends here
    #Define the name of the database and the name of the old database
    DataBakName=Data_$(date +"%Y%m%d").tar.gz
    #Delete the local backups made 3 days ago
    rm -rf /home/backup/Data_$(date -d -3day +"%Y%m%d").tar.gz /home/backup/Web_$(date -d -3day +"%Y%m%d").tar.gz
    #Export the databases, one compressed file per database
    /usr/local/mysql/bin/mysql -u$MYSQL_USER -p$MYSQL_PASS -B -N -e 'SHOW DATABASES' | xargs > mysqldata
    #Compress the database files into one file
    tar zcf /home/backup/$DataBakName /home/backup/*.sql.gz
    #Send the database by email. If the database is too large after compression, comment out this line
    echo "Subject: Database backup" | mutt -a /home/backup/$DataBakName -s "Content: Database backup" $MAIL_TO
    #Compress the website data
    tar zcf /home/backup/$WebBakName $WEB_DATA
    #Upload to the FTP space and delete the data uploaded to the FTP space 5 days ago
    ftp -v -n $FTP_IP $FILE

via: Automatic backup script
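The naming, local cleanup, and FTP steps of the script can be sketched as small shell functions. This is only an illustration under stated assumptions, not the original script: the function names `backup_name`, `prune_old_backups`, and `ftp_commands` are hypothetical, the cleanup uses `find -mtime` instead of removing one exact dated file, and `FTP_USER` and `FTP_PASS` are placeholder variables you would have to define yourself. It assumes GNU `date` and a `find` that supports `-maxdepth` and `-delete`.

```shell
#!/bin/bash
# Illustrative helpers only; all function names here are hypothetical.

# Build a date-stamped archive name such as Data_20240131.tar.gz.
# $1 = prefix (for example Data or Web)
# $2 = optional date stamp in YYYYMMDD form (defaults to today)
backup_name() {
    local prefix="$1"
    local stamp="${2:-$(date +"%Y%m%d")}"
    printf '%s_%s.tar.gz\n' "$prefix" "$stamp"
}

# Delete .tar.gz archives in a directory whose modification time is
# more than the given number of days in the past.
# $1 = backup directory, $2 = age in days
# Unlike removing a single dated file name, this also cleans up archives
# left behind when the job did not run on some day.
prune_old_backups() {
    local dir="$1"
    local days="$2"
    find "$dir" -maxdepth 1 -type f -name '*.tar.gz' -mtime +"$days" -delete
}

# Print the commands for a non-interactive ftp session: log in, upload
# one archive under its base name, delete one old remote archive, quit.
# $1 = FTP user, $2 = FTP password, $3 = local archive path,
# $4 = name of the old remote archive to delete
ftp_commands() {
    local user="$1" pass="$2" local_file="$3" old_remote="$4"
    printf 'user %s %s\n' "$user" "$pass"
    printf 'binary\n'
    printf 'put %s %s\n' "$local_file" "${local_file##*/}"
    printf 'delete %s\n' "$old_remote"
    printf 'bye\n'
}

# Example wiring (FTP_USER and FTP_PASS are placeholders, not from the
# original script):
#   today=$(backup_name Data)
#   old=$(backup_name Data "$(date -d -5day +"%Y%m%d")")
#   prune_old_backups /home/backup 3
#   ftp_commands "$FTP_USER" "$FTP_PASS" "/home/backup/$today" "$old" | ftp -v -n "$FTP_IP"
```

Because `ftp -n` disables auto-login, the `user` command has to be sent explicitly, which is why it is the first line that `ftp_commands` prints. To run the backup every night, the finished script could be registered in crontab with a line such as `0 3 * * * /root/backup.sh`, where both the time and the path are assumptions.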


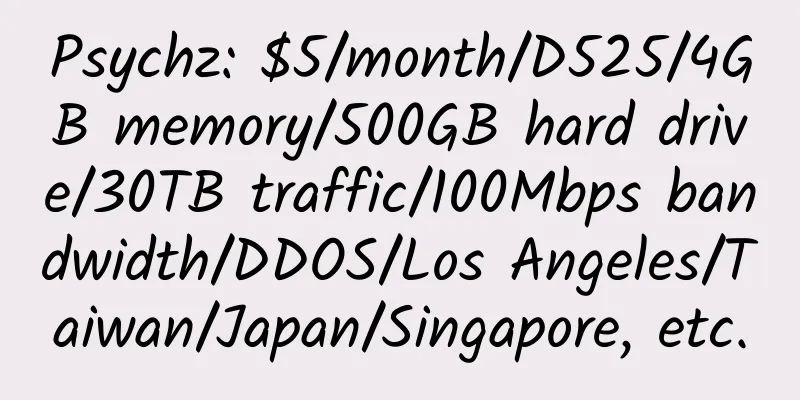

![[Black Friday] Psychz: $24/month/E3-1230v2/16GB RAM/1TB HDD/100TB traffic/1Gbps bandwidth/DDOS/Los Angeles](/upload/images/67c9e10462d85.webp)